Executive summary

DeepSeek and Gemini are optimized for different “default jobs” in 2026. DeepSeek is best understood as a cost-efficient general LLM/API (with thinking and non-thinking modes) plus a consumer app; it becomes a serious “search assistant” only if you build or self-host the retrieval layer. Gemini (from Google) is best understood as a search- and mobile-native ecosystem. Its consumer experiences (the Gemini app and Gemini Live) are built around voice, vision, and real-time information, and its developer platform offers first-party grounding with Google Search, built-in code execution and file search tools, and a context window of roughly 1M tokens (1,048,576 input tokens) for key models.

For the question “Which is better for search, coding, and mobile?”:

- Search (web + citations): Gemini’s built-in search grounding is the decisive advantage for most teams that need reliable, citable, up-to-date answers without building their own retrieval stack. DeepSeek can compete if you integrate a search API and enforce grounding yourself via tool calls, but that is engineering work and operational risk you own.

- Coding (IDE + agent workflows): Gemini has an unusually complete “coding surface area” in 2026: Gemini Code Assist for VS Code/JetBrains plus Gemini in Android Studio (code completion, transforms, tests, UI generation, etc.). DeepSeek’s advantage is API economics and an ecosystem of open-weight checkpoints (useful for private/self-hosted coding assistants), but it lacks a comparably documented, first-party IDE product suite.

- Mobile (voice/vision + on-device): Gemini is substantially stronger for mobile-first usage: Gemini Live supports natural voice plus camera/screen sharing and is positioned explicitly as a mobile experience across Android/iOS. Gemini also has a real on-device story via Gemini Nano integrated into Android’s AICore service for low-latency, offline-capable use cases. DeepSeek’s mobile app exists and is actively updated, but official materials do not position it as an on-device model or a first-class voice/vision assistant in the same way.

A risk that often dominates decisions: DeepSeek’s privacy policy states that personal data is processed and stored in the People’s Republic of China, which can be unacceptable in certain government or regulatory environments. For Gemini, privacy and retention depend on whether you use Gemini Apps or the Cloud/Workspace offerings, with explicit controls such as Temporary Chats and enterprise-grade governance claims for Workspace/Cloud.

Methodology and limitations

This comparison is based on primary sources (2024–2026) where available: official product pages, developer documentation, official pricing pages, app store listings, and primary benchmark reports. When a “primary” numeric benchmark was not published (notably for search relevance and mobile latency/battery), the report marks that data as “unspecified” and uses the closest available proxy (e.g., grounding/citation features, or third-party benchmark boards that report a clearly defined methodology).

Key benchmark sources used include:

- DeepSeek model papers (DeepSeek‑V3 technical report and DeepSeek‑R1 paper).

- Vectara hallucination leaderboard (summarization hallucination rate; last updated Feb 17, 2026) and related posts explaining methodology.

- Gemini 2.5 reporting from Google DeepMind and official Gemini model documentation/pricing pages.

News sources (used sparingly and clearly labeled as such) include Reuters for changes to “AI Mode” in Google Search.

Product overviews

DeepSeek in 2026

DeepSeek’s public site positions DeepSeek‑V3.2 as “reasoning-first models built for agents,” available on web, app, and API with “free access” messaging for end users.

For developers, the DeepSeek Open Platform documents an API base URL and two primary API model identifiers:

- deepseek-chat (non-thinking mode)

- deepseek-reasoner (thinking mode)

Both are listed as DeepSeek‑V3.2 with a documented 128K context length in the API.
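A minimal sketch of how the two identifiers map onto a request, assuming the OpenAI-compatible chat-completions format that DeepSeek’s API follows. The `https://api.deepseek.com/chat/completions` URL and the `build_chat_request` helper are assumptions for illustration; the function only builds the JSON body and sends nothing.

```python
import json

# Model identifiers as listed in the DeepSeek Open Platform docs.
DEEPSEEK_MODELS = {
    False: "deepseek-chat",      # non-thinking mode
    True: "deepseek-reasoner",   # thinking mode
}

# Assumed endpoint (OpenAI-compatible); confirm against the current docs.
DEEPSEEK_CHAT_URL = "https://api.deepseek.com/chat/completions"


def build_chat_request(prompt: str, thinking: bool = False) -> dict:
    """Build (but do not send) a chat-completions body for the chosen mode."""
    return {
        "model": DEEPSEEK_MODELS[thinking],
        "messages": [
            {"role": "system", "content": "You are a concise assistant."},
            {"role": "user", "content": prompt},
        ],
        "stream": False,
    }


if __name__ == "__main__":
    print(json.dumps(build_chat_request("Summarize this diff.", thinking=True), indent=2))
```

Switching modes is only a change of the `model` field. Because both identifiers share the documented 128K context, the choice is mainly whether the task justifies the extra reasoning tokens and latency of thinking mode.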

DeepSeek’s developer surface emphasizes classic LLM integration patterns: JSON output, tool calls, and (in beta) code-completion modes such as fill-in-the-middle (FIM) and prefix completion, depending on the model.
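In FIM mode you send the code before and after the cursor, and the model returns only the missing middle. The sketch below assumes a beta base URL of `https://api.deepseek.com/beta` with a `/completions` endpoint that takes `prompt` and `suffix` fields, and that FIM is limited to the non-thinking `deepseek-chat` model. All of that is our reading of the beta docs and should be verified before use; the helper names are hypothetical.

```python
# Assumptions (beta feature, verify in the docs): endpoint path, field names,
# and that FIM is only offered for the non-thinking model.
DEEPSEEK_FIM_URL = "https://api.deepseek.com/beta/completions"


def build_fim_request(prefix: str, suffix: str, max_tokens: int = 128) -> dict:
    """Ask the model to fill the gap between `prefix` and `suffix`."""
    return {
        "model": "deepseek-chat",
        "prompt": prefix,       # code before the cursor
        "suffix": suffix,       # code after the cursor
        "max_tokens": max_tokens,
    }


def splice(prefix: str, middle: str, suffix: str) -> str:
    """Reassemble the file once the model returns the missing middle."""
    return prefix + middle + suffix


body = build_fim_request("def fib(n):\n", "    return fib(n - 1) + fib(n - 2)\n")
```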

Gemini in 2026

Gemini spans three layers:

- Consumer/mobile: The Gemini app is available on Android and iOS, with “Gemini Live” positioned as real-time voice assistance with camera/screen sharing.

- Developer API: The Gemini API supports multiple model families (e.g., Gemini 2.5 Pro/Flash/Flash‑Lite), long context (often 1,048,576 input tokens for key models), and a set of built-in tools (code execution, file search, Google Search grounding, Google Maps grounding—model-dependent).

- On-device: Gemini Nano is an on-device model integrated into Android’s AICore system service, explicitly framed for low-latency, on-device inference and offline-capable experiences for certain use cases.

Detailed feature comparison for search, coding, and mobile

Feature comparison table

| Capability (search/coding/mobile focus) | DeepSeek | Gemini |
|---|---|---|
| Default “search” mode (real-time web grounding + citations) | Not first-party in DeepSeek API/app docs; you typically implement via tool calls + your own search provider + citation policy. | First-party Grounding with Google Search for real-time info and citations (Gemini API / Vertex AI docs). |
| Built-in retrieval / knowledge base tool | Not documented as a first-party built-in vector store; typically “bring your own retrieval” through tools. | Built-in “File search” is listed as supported for Gemini 2.5 Pro/Flash and similar models on the models page. |
| Context window | DeepSeek API docs list 128K context for V3.2. | Gemini 2.5 Pro/Flash/Flash‑Lite list 1,048,576 input token limit and up to 65,536 output tokens (model-dependent). |
| Code execution tooling | Tool calls supported (you run the tool). No first-party “code execution sandbox” is documented. | “Code execution” is listed as supported for Gemini 2.5 Pro/Flash/Flash‑Lite in the model capabilities table. |
| IDE integrations | No official DeepSeek IDE plugin is clearly documented in official sources reviewed (unspecified). | Gemini Code Assist supports VS Code + JetBrains IDEs; separate Gemini in Android Studio features (completion, transforms, tests, etc.). |
| Code generation quality (published benchmarks) | DeepSeek-V3 and DeepSeek-R1 papers report competitive coding metrics, including SWE-bench Verified figures (see benchmark section). | Google reports SWE-bench Verified for Gemini 2.5 Pro with a custom agent setup; also appears on SWE-bench leaderboards (see benchmark section). |
| Mobile app voice/vision | App exists and is updated, but official public pages reviewed do not clearly document voice-first + camera/screen-sharing workflows (unspecified). | Gemini Live supports natural voice plus camera/screen sharing; available broadly across countries/languages and on iOS/Android. |
| On-device / offline option | Not documented as a first-party on-device model in official DeepSeek product docs; open-weight models can be self-hosted (including locally) depending on deployment skills/hardware (practicality on phones varies; unspecified). | Gemini Nano is explicitly marketed for on-device inference via AICore, including offline-capable use cases. |
| Privacy/data residency signals for consumer mobile use | Privacy policy states personal data is processed and stored in the People’s Republic of China. | Gemini Apps have a dedicated privacy hub; data shared with other Google services follows Google Privacy Policy and can be used for product improvement; privacy controls like Temporary Chats are documented. |

Search-focused analysis

Gemini’s strongest search advantage is first-party grounding with Google Search: it is explicitly designed to reduce hallucinations, access real-time information, and provide citations. On the product side, Google has also tested an AI-forward “AI Mode” search experience (subscription-gated in early testing) with links/citations, indicating institutional investment in Gemini-powered search experiences beyond chat.

DeepSeek, by contrast, is best treated as a high-quality generation engine: it supports tool calls and structured outputs, but does not advertise a built-in “web search grounding” facility in the core Open Platform model table. In practice, “DeepSeek for search” usually means you (a) call a search API, (b) feed results back into the model, and (c) implement citation/attribution rules. (This can be excellent, but it’s not turnkey.)
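Steps (b) and (c) are where most of the work lives. Here is a minimal sketch, assuming your search provider returns a title, URL, and snippet per result. `SearchResult`, `build_grounded_messages`, and `check_citations` are hypothetical names, and the `[n]` citation convention is our choice, not a DeepSeek feature. The sketch numbers the sources in the prompt, instructs the model to cite them, and rejects answers whose citations are missing or out of range.

```python
import re
from dataclasses import dataclass


@dataclass
class SearchResult:
    title: str
    url: str
    snippet: str


def build_grounded_messages(query: str, results: list[SearchResult]) -> list[dict]:
    """Step (b): pack numbered sources into the prompt with a citation rule."""
    sources = "\n".join(
        f"[{i}] {r.title} ({r.url})\n{r.snippet}" for i, r in enumerate(results, start=1)
    )
    system = (
        "Answer only from the numbered sources. Cite every claim as [n]. "
        "If the sources do not answer the question, say so."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": f"Sources:\n{sources}\n\nQuestion: {query}"},
    ]


def check_citations(answer: str, n_sources: int) -> bool:
    """Step (c), enforced after generation: at least one citation, all in range."""
    cited = {int(m) for m in re.findall(r"\[(\d+)\]", answer)}
    return bool(cited) and all(1 <= c <= n_sources for c in cited)
```

The returned list can be passed as `messages` in a DeepSeek chat request. An answer that fails `check_citations` can be retried or flagged for review.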

Coding-focused analysis

Gemini’s coding advantage is breadth of developer experience:

- Gemini Code Assist is documented for VS Code and JetBrains IDEs and is positioned explicitly as an IDE collaborator for code generation and transformation.

- Gemini in Android Studio adds code completion and broader IDE workflows (tests, transformations, UI generation, etc.), with explicit notes that contextual codebase information may be sent to the LLM to improve suggestions.

- The Gemini API additionally lists code execution and file search as supported capabilities for major models, complementing IDE use with agent-like application features.
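The following sketch shows the “code execution” capability through the Gemini REST API as we understand it. The tool is enabled with an empty `code_execution` entry in `tools`, and the reply interleaves `text`, `executableCode`, and `codeExecutionResult` parts. Check the field names against the current API reference. The sample response is hand-written for illustration, not captured from the API, and `summarize_parts` is a hypothetical helper.

```python
def build_code_execution_request(prompt: str) -> dict:
    """generateContent body with the built-in code execution tool enabled."""
    return {
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        "tools": [{"code_execution": {}}],
    }


def summarize_parts(response: dict) -> list[tuple[str, str]]:
    """Flatten the first candidate's parts into (kind, content) pairs."""
    summary = []
    for part in response["candidates"][0]["content"]["parts"]:
        if "text" in part:
            summary.append(("text", part["text"]))
        elif "executableCode" in part:
            summary.append(("code", part["executableCode"]["code"]))
        elif "codeExecutionResult" in part:
            summary.append(("result", part["codeExecutionResult"].get("output", "")))
    return summary


# Hand-written, illustrative response shape (not real API output).
SAMPLE_RESPONSE = {
    "candidates": [{
        "content": {"parts": [
            {"text": "I'll compute it."},
            {"executableCode": {"language": "PYTHON", "code": "print(sum(range(10)))"}},
            {"codeExecutionResult": {"outcome": "OUTCOME_OK", "output": "45\n"}},
            {"text": "The sum is 45."},
        ]}
    }]
}
```

The code runs in Google’s sandbox rather than on your infrastructure. That is the practical difference from DeepSeek tool calls, where you execute the tool yourself.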

DeepSeek’s coding story is primarily:

- API economics + long context for codebase analysis and generation (128K in API docs).

- Research strength and open ecosystem: DeepSeek’s model papers report strong coding benchmark performance, and open repositories enable deep customization/self-hosting when feasible.
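Whether “long context for codebase analysis” is enough depends on how much code you actually send. Here is a back-of-the-envelope sketch. It assumes roughly 4 characters per token (a common rule of thumb, not a vendor tokenizer figure) and treats “128K” as 128,000 tokens. The limits come from the API docs cited above, and the names are hypothetical. For exact counts, use the provider’s own tokenizer.

```python
# Rule-of-thumb ratio (assumption); real tokenizers vary by language and content.
CHARS_PER_TOKEN = 4

# Context limits from the API docs; "128K" is taken as 128,000 here (assumption).
CONTEXT_LIMITS = {
    "deepseek-v3.2": 128_000,
    "gemini-2.5-pro": 1_048_576,
}


def estimate_tokens(files: dict[str, str]) -> int:
    """Approximate token count for a set of source files (ceiling division)."""
    total_chars = sum(len(text) for text in files.values())
    return -(-total_chars // CHARS_PER_TOKEN)


def fits(files: dict[str, str], model: str, reserve_for_output: int = 8_000) -> bool:
    """True if the files plus an output budget should fit in the model's window.

    Reserving output space is conservative for Gemini, whose input and output
    limits are listed separately.
    """
    return estimate_tokens(files) + reserve_for_output <= CONTEXT_LIMITS[model]
```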

Mobile-focused analysis (including on-device)

Gemini’s mobile differentiation is unusually clear in first-party materials:

- Gemini Live is explicitly positioned as a mobile experience, including real-time spoken responses and the ability to share camera or screen context.

- Gemini Nano is explicitly intended for on-device use, integrated via Android’s AICore service; official Android developer docs emphasize low inference latency and leveraging device hardware.

DeepSeek’s mobile presence is real (the official Android app is actively maintained with recent updates), but first-party public materials reviewed do not document an equivalent on-device model path or a Gemini-Live-style voice/vision experience.

Battery/CPU considerations are therefore structurally different:

- Gemini Nano (on-device): energy/thermal impact depends on device and use case; primary sources emphasize hardware acceleration/low-latency but do not provide standardized battery benchmarks (unspecified).

- Cloud-chat (Gemini API / DeepSeek API): the heavy compute is server-side; mobile cost is primarily network + UI + any local pre/post-processing (official battery metrics unspecified).

Pricing and quotas

Pricing comparison table

| Pricing area | DeepSeek | Gemini |
|---|---|---|
| Consumer app | “Free access to DeepSeek‑V3.2” is promoted on the main site; no official paid tier price was found in reviewed sources (unspecified). | Multiple consumer subscription tiers are published: e.g., Google AI Plus ($7.99/mo), Google AI Pro ($19.99/mo), and an Ultra tier shown in some regions (e.g., £234.99/mo in UK listing). Availability and price vary by region. |
| Developer API (headline text models, USD per 1M tokens) | DeepSeek‑V3.2 API price: $0.28 / 1M input tokens (cache miss), $0.028 / 1M input tokens (cache hit), $0.42 / 1M output tokens. | Gemini 2.5 pricing examples (paid tier, per 1M tokens): Flash‑Lite $0.10 in / $0.40 out, Flash $0.30 in / $2.50 out, Pro $1.25 in / $10.00 out (≤200k prompt tier; higher for >200k prompts). A free tier exists for some models. |
| Context caching (API economics) | Cache-hit input is priced 10× lower than cache-miss input ($0.028 vs. $0.28 per 1M tokens) in the published table. | Gemini pricing includes context caching prices and a storage price (per 1M tokens per hour) for cached content; rates vary by model. |
| Search grounding (API add-on) | Not documented as a first-party product line item; you bring your own search stack and bear its costs. | Grounding with Google Search is priced separately after free quotas (e.g., “$35 / 1,000 grounded prompts” for some models; other rows show “$14 / 1,000 search queries” depending on feature). |
| Coding assistant (IDE) | First-party pricing for an official IDE assistant is not published in reviewed sources (unspecified). | Gemini Code Assist: Individuals is $0; Standard is listed at $19/user/month (annual upfront) and Enterprise $45/user/month (annual upfront), with Cloud pricing also given as hourly license fees. |
| Quotas / limits | DeepSeek quotas/rate limits are not clearly enumerated in the pricing table (unspecified). | Gemini API has a dedicated rate limits page and tiered billing; Code Assist has a quotas/limits page covering Code Assist + Gemini CLI. |

Practical cost notes

DeepSeek’s pricing is extremely output-friendly compared with many premium models. For large code refactors, long explanations, and high-volume automation that generates many tokens, output pricing often becomes the dominant driver of TCO, especially if you can also exploit cache hits on repeated prompt prefixes to cut input costs.
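To see how much cache hits matter, here is the arithmetic as a small function using the published DeepSeek‑V3.2 rates from the pricing table (USD per 1M tokens). The workload numbers in the example are hypothetical, and the cache-hit ratio is something you would measure from your own traffic.

```python
# Published DeepSeek-V3.2 API rates, USD per 1M tokens (from the pricing table).
INPUT_CACHE_MISS = 0.28
INPUT_CACHE_HIT = 0.028
OUTPUT = 0.42


def deepseek_cost(input_tokens: int, output_tokens: int, cache_hit_ratio: float = 0.0) -> float:
    """Estimated USD cost; cache_hit_ratio is the share of input tokens served from cache."""
    if not 0.0 <= cache_hit_ratio <= 1.0:
        raise ValueError("cache_hit_ratio must be between 0 and 1")
    hit_tokens = input_tokens * cache_hit_ratio
    miss_tokens = input_tokens - hit_tokens
    return (
        miss_tokens * INPUT_CACHE_MISS
        + hit_tokens * INPUT_CACHE_HIT
        + output_tokens * OUTPUT
    ) / 1_000_000


# Hypothetical month: 100M input tokens, 20M output tokens.
no_cache = deepseek_cost(100_000_000, 20_000_000)            # 28.00 + 8.40 = 36.40
mostly_cached = deepseek_cost(100_000_000, 20_000_000, 0.8)  # 5.60 + 2.24 + 8.40 = 16.24
```

Cache hits apply to repeated prompt prefixes. To achieve a high hit ratio, put stable content (system prompt, shared context) first and the variable part last.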

Gemini’s pricing is more nuanced: Flash‑Lite is very inexpensive, while Pro is substantially more expensive (particularly for large prompt sizes), and search grounding can introduce an additional per-prompt charge past free quotas.
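Here is the same kind of arithmetic for the Gemini 2.5 rates in the pricing table, with grounding modeled as a flat charge per 1,000 grounded prompts beyond a free quota. This sketch rests on stated assumptions: the rates apply only to prompts of at most 200k tokens, and the $35 per 1,000 figure applies only to some models. The free quota is a parameter because it varies by model and tier, and the value used in the example is hypothetical.

```python
# Gemini 2.5 paid-tier rates, USD per 1M tokens (input, output), for prompts
# of at most 200k tokens; larger Pro prompts are billed higher (not modeled).
GEMINI_RATES = {
    "flash-lite": (0.10, 0.40),
    "flash": (0.30, 2.50),
    "pro": (1.25, 10.00),
}


def gemini_cost(
    model: str,
    input_tokens: int,
    output_tokens: int,
    grounded_prompts: int = 0,
    free_grounded_prompts: int = 0,
    usd_per_1000_grounded: float = 35.0,
) -> float:
    """Estimated USD cost: token charges plus grounding past the free quota."""
    rate_in, rate_out = GEMINI_RATES[model]
    token_cost = (input_tokens * rate_in + output_tokens * rate_out) / 1_000_000
    billable = max(0, grounded_prompts - free_grounded_prompts)
    return token_cost + billable / 1000 * usd_per_1000_grounded


# Hypothetical month: 100M input tokens, 20M output tokens.
flash = gemini_cost("flash", 100_000_000, 20_000_000)  # 30 + 50 = 80
pro = gemini_cost("pro", 100_000_000, 20_000_000)      # 125 + 200 = 325
# 10,000 grounded prompts with a hypothetical free quota of 1,500: 80 + 8.5 * 35
flash_grounded = gemini_cost("flash", 100_000_000, 20_000_000, 10_000, 1_500)
```

Comparing the output rates ($2.50 and $10.00 versus $0.42 per 1M tokens) shows why output-heavy workloads are where the two price lists diverge most.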

Benchmarks and performance evidence

Coding correctness benchmarks

SWE-bench Verified is one of the most cited “real-world coding” evaluations, but comparisons must be handled carefully because providers often use different scaffolding/agent setups.

Published reference points (selected):

| Benchmark | DeepSeek | Gemini |
|---|---|---|
| SWE-bench Verified (resolved) | DeepSeek‑R1 paper reports 49.2% resolved. DeepSeek‑V3 technical report figure includes an SWE-bench Verified value for DeepSeek‑V3 (reported alongside other models). | Google reports 63.8% for Gemini 2.5 Pro on SWE-bench Verified with a custom agent setup. SWE-bench official leaderboards also list Gemini 2.5 Pro entries under particular scaffolding/filter conditions. |
| Code competition (Codeforces) | DeepSeek‑R1 reports a 2029 Elo Codeforces rating (competition framing). | Not specified in official Gemini materials reviewed (unspecified). |

Interpretation: Gemini 2.5 Pro appears stronger on the publicly cited SWE-bench Verified numbers, but that figure was obtained with optimized agent scaffolding. DeepSeek‑R1 remains highly competitive, especially given its cost profile. Because Google’s reporting explicitly notes its custom agent setup, the raw numbers should not be treated as fully apples-to-apples.
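Percentages on SWE-bench Verified are easier to compare as task counts. The Verified split has 500 human-validated instances, so a resolved rate converts directly. This is only arithmetic on the reported figures and does not remove the scaffolding caveat.

```python
# SWE-bench Verified contains 500 human-validated task instances.
SWE_BENCH_VERIFIED_SIZE = 500


def resolved_instances(resolved_pct: float, total: int = SWE_BENCH_VERIFIED_SIZE) -> int:
    """Convert a reported resolved rate (in %) into a number of solved tasks."""
    return round(resolved_pct / 100 * total)


deepseek_r1 = resolved_instances(49.2)    # DeepSeek-R1 paper: 246 tasks
gemini_25_pro = resolved_instances(63.8)  # Google, custom agent setup: 319 tasks
gap = gemini_25_pro - deepseek_r1         # 73 tasks
```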

Hallucination rates (summarization proxy)

For hallucinations, a widely referenced cross-model proxy is Vectara’s leaderboard measuring hallucination rates when summarizing documents (a narrower task than general Q&A, but useful for comparing “fabrication tendency” under constrained evidence).

Selected values (Vectara hallucination leaderboard; last updated Feb 17, 2026):

- Gemini 2.5 Flash‑Lite: 3.3%

- Gemini 2.5 Pro: 7.0%

- Gemini 3 Flash Preview: 13.5%

- Gemini 3 Pro Preview: 13.6%

- DeepSeek‑V3.2: 6.3%

- DeepSeek‑V3.2‑Exp: 5.3%

- DeepSeek‑R1: 11.3%

A key pattern from this benchmark: some “reasoning/heavier” models hallucinate more on summarization tasks than lighter models. Vectara highlighted the same pattern for DeepSeek‑R1 versus DeepSeek‑V3 in earlier leaderboard analysis posts.

Search relevance benchmarks

A standardized, widely accepted numeric benchmark for “search relevance” in consumer assistant workflows (i.e., query understanding + retrieval + summarization + citation quality) is not consistently published across both vendors in primary sources (unspecified).

What is objectively documented is capability-level leverage:

- Gemini supports grounding responses in Google Search for real-time information and citations (API/Vertex AI docs).

- DeepSeek’s API model table does not document an equivalent first-party web-search tool; web grounding requires customer-built tooling.
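Here is what the Gemini side of that leverage looks like in a request, as a sketch over the REST `generateContent` body. Grounding is switched on by adding a `google_search` entry to `tools`; that is our understanding for Gemini 2.x models, and older models used a differently named tool. The citations come back in `groundingMetadata`, where each `groundingSupports` entry links a text segment to indices in `groundingChunks`. Verify the field names against the current reference. The sample response is hand-written for illustration, and `extract_citations` is a hypothetical helper.

```python
def build_grounded_request(query: str) -> dict:
    """generateContent body with Grounding with Google Search enabled."""
    return {
        "contents": [{"role": "user", "parts": [{"text": query}]}],
        "tools": [{"google_search": {}}],
    }


def extract_citations(response: dict) -> list[tuple[str, list[str]]]:
    """Pair each grounded text segment with the URIs of its supporting sources."""
    meta = response["candidates"][0].get("groundingMetadata", {})
    chunks = meta.get("groundingChunks", [])
    citations = []
    for support in meta.get("groundingSupports", []):
        uris = [chunks[i]["web"]["uri"] for i in support.get("groundingChunkIndices", [])]
        citations.append((support["segment"]["text"], uris))
    return citations


# Hand-written, illustrative response shape (not real API output).
SAMPLE_RESPONSE = {
    "candidates": [{
        "content": {"parts": [{"text": "Gemini Nano runs on-device via AICore."}]},
        "groundingMetadata": {
            "webSearchQueries": ["gemini nano aicore"],
            "groundingChunks": [{"web": {"uri": "https://example.com/nano", "title": "example.com"}}],
            "groundingSupports": [{
                "segment": {"startIndex": 0, "endIndex": 38,
                            "text": "Gemini Nano runs on-device via AICore."},
                "groundingChunkIndices": [0],
            }],
        },
    }]
}
```

A DeepSeek-based stack has to produce the equivalent of `groundingMetadata` itself; that is the customer-built tooling the second bullet refers to.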

Latency and throughput (mobile and server)

Mobile (on-device): Android’s Gemini Nano documentation emphasizes AICore integration that leverages device hardware and “low inference latency,” but does not publish standardized latency/battery benchmarks (unspecified).

Server/API: Official fixed p95 latency/throughput guarantees are not published in the pricing/model docs reviewed (unspecified). A third-party report (VentureBeat) cited pre-release throughput testing figures, but these are not primary vendor benchmarks and should be treated as indicative only.
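Since neither vendor publishes latency guarantees in the reviewed docs, the practical option is to measure your own p95 from your own region and network. The sketch below uses the nearest-rank percentile definition. `call` stands for whatever function sends one request to the API under test (a hypothetical placeholder here), and the sample count is deliberately small.

```python
import time
from typing import Callable


def p95(samples_ms: list[float]) -> float:
    """Nearest-rank 95th percentile of latency samples (milliseconds)."""
    if not samples_ms:
        raise ValueError("need at least one sample")
    ordered = sorted(samples_ms)
    rank = -(-95 * len(ordered) // 100)  # ceil(0.95 * n) in integer arithmetic
    return ordered[rank - 1]


def measure(call: Callable[[], object], runs: int = 20) -> list[float]:
    """Time `runs` sequential invocations of `call`, returning milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


if __name__ == "__main__":
    # Stand-in for a real API call; replace with your client code.
    print(f"p95 = {p95(measure(lambda: time.sleep(0.01))):.1f} ms")
```

For streaming chat UIs, time-to-first-token is often the more relevant number. The same helpers apply if `call` returns as soon as the first chunk arrives.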

Migration and integration considerations

API integration and migration steps

The biggest integration decision is whether your product depends on first-party tools (search grounding, file search, code execution) vs “bring-your-own toolchain.”

- If your application’s differentiator is search grounding + citations, Gemini is architected to make that a first-class API feature (with explicit billing and quotas), which reduces custom infrastructure work.

- If your differentiator is cheap high-volume generation, DeepSeek’s cost structure is straightforward and favorable, and you can implement retrieval/tooling externally.

Practical migration patterns:

- DeepSeek → Gemini (search-first upgrade)

  - Replace tool-call-based “web search” with Gemini’s Google Search grounding (and ensure you now handle grounding quotas + costs).

  - If you used your own vector store, evaluate whether Gemini’s “file search” tool meets your needs or keep your existing RAG.

- Gemini → DeepSeek (cost-first consolidation)

  - Rebuild any reliance on built-in search grounding, file search, or code execution as external services called via DeepSeek tool calls.

  - Add an explicit citation pipeline (e.g., attach the source snippets you retrieved and require the model to cite them). (Mechanism is implementation-specific; official DeepSeek citations feature is unspecified.)
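The tool-call half of the Gemini → DeepSeek rebuild can be sketched in the OpenAI-compatible format DeepSeek’s API uses. You declare a function tool, and when the model responds with `tool_calls`, you run your own search and append one `tool` message per call. The `web_search` tool name, its schema, and the `search` callable are hypothetical. Numbering the results gives the model something concrete to cite.

```python
import json
from typing import Callable

# Hypothetical tool definition; you implement the search behind it.
WEB_SEARCH_TOOL = {
    "type": "function",
    "function": {
        "name": "web_search",
        "description": "Search the web and return numbered results with URLs.",
        "parameters": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    },
}


def run_tool_calls(assistant_message: dict, search: Callable[[str], list[dict]]) -> list[dict]:
    """Execute each requested tool call and return the `tool` messages to append."""
    tool_messages = []
    for call in assistant_message.get("tool_calls") or []:
        name = call["function"]["name"]
        if name == "web_search":
            query = json.loads(call["function"]["arguments"])["query"]
            # Number results so the final answer can cite them as [n].
            results = [{"n": i, **r} for i, r in enumerate(search(query), start=1)]
            content = json.dumps(results)
        else:
            # Answer every call, even unknown ones, so the conversation stays valid.
            content = json.dumps({"error": f"unknown tool {name}"})
        tool_messages.append({"role": "tool", "tool_call_id": call["id"], "content": content})
    return tool_messages
```

Append the assistant message and the returned tool messages to `messages`, then send the request again with `tools=[WEB_SEARCH_TOOL]` and apply your citation check to the final answer.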

IDE/plugin ecosystems

If your “coding experience” is primarily inside IDEs:

- Gemini has documented surfaces across VS Code/JetBrains and Android Studio, plus a clear pricing structure for both individuals and business tiers.

- DeepSeek does not publish an equivalent first-party IDE product in sources reviewed (unspecified), so adopting it for IDE workflows usually means relying on community tooling or building a plugin that calls your own backend.

Mobile integration and on-device strategy

Gemini’s mobile story can be split into two distinct integration modes:

- Cloud Gemini API in a mobile product (standard client-server pattern; you typically proxy calls through your server for key security). Gemini API includes model/tool capabilities and a rate limits framework.

- On-device Gemini Nano on Android for offline/low-latency workflows (availability depends on device/OS support).

DeepSeek’s practical mobile integration is typically cloud-only (call DeepSeek through your server). An on-device strategy is only realistic if you commit to self-hosting open-weight models and deploying them in a mobile-compatible runtime—this is not described as a standard DeepSeek mobile offering in official sources (unspecified).
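In both cases the mobile app should never hold the provider API key. Below is a minimal sketch of the server-side half of such a proxy. It assumes the Gemini REST `generateContent` URL with an `x-goog-api-key` header and DeepSeek’s OpenAI-style `Authorization: Bearer` header; verify both against current docs. The environment variable names and the model allowlist are hypothetical. The function only assembles the upstream request, so any HTTP client can send it.

```python
import os
from collections.abc import Mapping

# Assumed upstream endpoints; confirm against each provider's current docs.
GEMINI_URL = "https://generativelanguage.googleapis.com/v1beta/models/{model}:generateContent"
DEEPSEEK_URL = "https://api.deepseek.com/chat/completions"

# Hypothetical allowlist: stops clients from requesting pricier models.
ALLOWED_MODELS = {
    "gemini": {"gemini-2.5-flash", "gemini-2.5-flash-lite"},
    "deepseek": {"deepseek-chat"},
}


def build_upstream(
    provider: str, model: str, body: dict, env: Mapping[str, str] = os.environ
) -> tuple[str, dict, dict]:
    """Return (url, headers, payload) with the server-held key attached."""
    if model not in ALLOWED_MODELS.get(provider, set()):
        raise PermissionError(f"model {model!r} is not allowed for {provider!r}")
    if provider == "gemini":
        headers = {"x-goog-api-key": env["GEMINI_API_KEY"], "Content-Type": "application/json"}
        return GEMINI_URL.format(model=model), headers, body
    headers = {"Authorization": f"Bearer {env['DEEPSEEK_API_KEY']}", "Content-Type": "application/json"}
    return DEEPSEEK_URL, headers, {**body, "model": model}
```

Keeping the key server-side also gives you one place to enforce per-user quotas, which matters because both APIs bill per token.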

Strengths, weaknesses, and decision framework

Strengths and weaknesses focused on search, coding, and mobile

DeepSeek strengths:

- Excellent published API price/performance for token-intensive workloads.

- Strong research performance reports on reasoning/coding tasks in primary papers.

- Adaptability for self-hosting and customization via open ecosystem artifacts (project/repo availability varies by model; DeepSeek-R1 is publicly released).

DeepSeek weaknesses:

- Search assistant capability is not turnkey without building your own retrieval/citation layer.

- Mobile privacy/data residency risk can be a blocker: policy states storage/processing in China.

- First-party IDE/mobile voice-vision UX is not comparably documented in public sources (unspecified).

Gemini strengths:

- Best-in-class “search-native” features: Google Search grounding with citations is a first-class API feature.

- Strong mobile UX and multimodal assistant features via Gemini Live (voice + camera/screen context).

- A real on-device path via Gemini Nano/AICore for offline-capable and low-latency Android use cases.

- Broad coding surface area (IDE assist + Android Studio integration + API tools).

Gemini weaknesses:

- Pricing can become expensive for long outputs on higher-end models (e.g., Pro) and grounding can add additional charges past free quotas.

- Hallucination rates vary strongly by model family on summarization-focused evaluation: Gemini 3 preview models score notably higher hallucination rates than Gemini 2.5 Flash‑Lite in Vectara’s measure.

- Mobile + app usage policies can be complex (Apps vs Workspace vs Cloud); retention and training usage differ by mode and settings (details vary and should be confirmed for your account type; some aspects unspecified).

Decision matrix for search, coding, and mobile

| Priority | Better default choice | Why |
|---|---|---|
| Web search with citations, fast path to production | Gemini | Built-in grounding with Google Search and citation support reduces custom engineering. |
| Cheapest scalable generation (especially high output) | DeepSeek | Very low per-token pricing with cache-hit advantage; straightforward billing. |
| IDE-centric coding assistance | Gemini | First-party Code Assist for IDEs + Android Studio integration + published pricing tiers. |
| Mobile-first voice/vision assistant UX | Gemini | Gemini Live is explicitly designed for voice + camera/screen sharing on mobile. |
| On-device/offline Android experiences | Gemini | Gemini Nano via AICore supports on-device, offline-capable use cases. |
| Strict data residency / geopolitical constraints (mobile) | Depends (often favors Gemini for many orgs) | DeepSeek policy states China storage/processing; many orgs will prefer vendor options that align with their governance requirements. (Exact residency options for Gemini depend on product contract/path; unspecified.) |

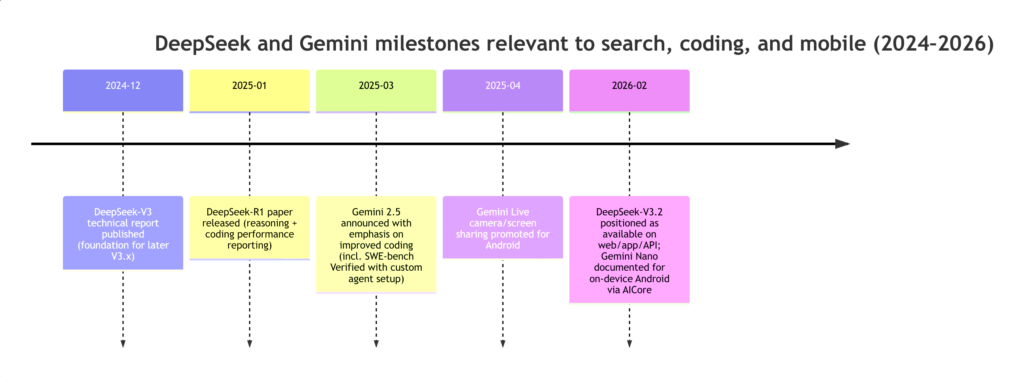

Product timeline chart

The following milestones are representative, not exhaustive; they anchor the 2024–2026 comparison window.

Actionable recommendations

If you care most about search

Choose Gemini if you need:

- Real-time web answers with built-in citations and reduced hallucination risk through Google Search grounding, without building a custom search+RAG system.

- A product path that aligns with “AI-native search” evolutions (e.g., AI Mode experiments in search).

Choose DeepSeek if:

- You already operate a retrieval stack (search engine, indexing, RAG) and want the cheapest strong generator behind it; treat DeepSeek as the reasoning/generation engine and keep your search provenance rules external.

If you care most about coding

Choose Gemini if you want the fastest time-to-value for developers:

- Gemini Code Assist (individuals free, business tiers) plus Android Studio integration covers many day-to-day workflows without custom tooling.

Choose DeepSeek if:

- You are building an internal coding agent where token cost is the dominant constraint, or you plan to operate an on-prem / highly customized assistant using the open ecosystem (and can invest in engineering + governance).

If you care most about mobile

Choose Gemini for mobile-first assistants:

- Gemini Live (voice plus camera/screen sharing) and Gemini Nano on-device options create a uniquely strong mobile stack.

Be cautious with DeepSeek on mobile in regulated contexts:

- The data residency posture (China storage/processing) can be disqualifying for certain organizations and geographies, regardless of model quality or cost.